What is ETL Data Pipeline vs ETL Pipeline: 3 Best Key Differences

What is an ETL pipeline, and how does it differ from a data pipeline? Both play a significant role in moving data between systems. If you’re seeking the advantages of ETL and data pipelines for your company, Integrat.io is the ideal solution. As a top cloud-based ETL platform, Integrat.io empowers your organization with efficient data extraction, transformation, and loading capabilities.

For data warehousing and business intelligence applications, an ETL pipeline is recommended. If you need a flexible solution for diverse data processing tasks, a data pipeline is the better choice.

Data Pipeline vs ETL Pipeline

An ETL pipeline is a set of processes used to move data from one or more sources into a database, typically a data warehouse. The term “ETL” stands for Extract, Transform, Load, which names the three interdependent steps involved in this process.

ETL pipeline meaning:

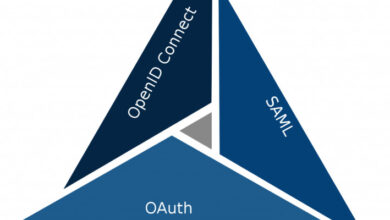

ETL stands for “extract, transform, load.” It refers to the three essential steps involved in data integration, where data is extracted from a source, transformed according to business requirements, and loaded into a different database or system.
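The three steps above can be sketched in a few lines of Python. This is only a minimal illustration, not a production design: the CSV content, the `orders` table, and the function names `extract`, `transform`, `load`, and `run_etl` are all assumptions made for the example, and an in-memory SQLite database stands in for the target system.

```python
import csv
import io
import sqlite3

# Hypothetical source data; in practice this might come from a file, an API, or a database.
RAW_CSV = """order_id,customer,amount
1, alice ,19.99
2,BOB,5.00
3,carol,not_a_number
"""


def extract(text):
    """Extract: read raw rows from the CSV source as dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: normalize names, parse amounts, and drop rows that fail validation."""
    cleaned = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # skip rows whose amount cannot be parsed
        cleaned.append((int(row["order_id"]), row["customer"].strip().title(), amount))
    return cleaned


def load(rows, conn):
    """Load: write the cleaned rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


def run_etl(text, conn):
    """Run the three steps in order: extract, then transform, then load."""
    load(transform(extract(text)), conn)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")  # stand-in for a real warehouse
    run_etl(RAW_CSV, conn)
    print(conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
```

Keeping each step in its own function makes the dependency between them explicit: the load step only ever sees rows that have already passed through the transform step.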

What is a Data Pipeline:

Data pipelines typically operate with a continuous flow of data, allowing for the collection, processing, and storage of new data in near real-time.

What is an ETL pipeline:

While “ETL pipeline” specifically refers to the extraction, transformation, and loading processes, the broader term “data pipeline” encompasses the entire set of processes involved in moving data from one system to another. Data pipelines can include additional steps beyond ETL, and they may not always involve data transformation or loading into a destination database. Let’s dive into data pipeline vs ETL in detail:

ETL pipeline vs data pipeline: When to use

A data pipeline is a collection of tools for transferring data between systems, with or without data transformation. ETL pipelines, a subset of data pipelines, are designed for handling large data volumes and complex transformations. ETL pipelines typically move data in batches on a scheduled basis to the target system.

For data warehousing and business intelligence applications, an ETL pipeline is often the optimal choice. ETL pipelines specialize in extracting, transforming, and loading data from diverse source systems into a target system or data warehouse. They are specifically optimized to handle substantial data volumes and complex data transformations.

Some example use cases for ETL pipelines are:

- Data warehousing: ETL pipelines are often used for data warehousing applications, where data is extracted from source systems, transformed, and loaded into the warehouse.

- Business intelligence: Data is extracted from various sources, transformed to provide insights, and loaded into a data warehouse.

- Batch processing: ETL pipelines are commonly employed for batch processing, handling significant data volumes at scheduled intervals.
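To make the batch-processing case more concrete, the sketch below groups records into fixed-size batches before handing them to a loader. The helper names `batches` and `load_in_batches`, and the `sink` callable, are hypothetical and exist only for this illustration. Scheduling (for example, a nightly job) is assumed to be handled by an external scheduler and is not shown.

```python
from itertools import islice


def batches(records, batch_size):
    """Yield successive lists of at most batch_size records."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    iterator = iter(records)
    while True:
        chunk = list(islice(iterator, batch_size))
        if not chunk:
            return
        yield chunk


def load_in_batches(records, batch_size, sink):
    """Pass each batch to sink (a hypothetical loader) and return the number of batches sent."""
    count = 0
    for chunk in batches(records, batch_size):
        sink(chunk)
        count += 1
    return count


if __name__ == "__main__":
    loaded = []
    sent = load_in_batches(range(7), 3, loaded.append)
    print(sent, loaded)
```

Loading in batches rather than row by row is one common way to keep the number of round trips to the target system manageable when volumes are large.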

Data pipelines, on the other hand, can encompass various tasks such as data integration, migration, synchronization, and processing for machine learning and artificial intelligence. Modern data pipelines often incorporate real-time processing with streaming computation, enabling continuous data updates. This capability facilitates real-time analytics and reporting, and it can trigger actions in other systems.

If your data processing tasks involve a diverse range of activities like data integration, migration, synchronization, or machine learning and AI processing, a data pipeline is likely a better choice. Data pipelines offer automation and optimized speed, reducing the time and effort required for data movement and processing. Moreover, they can be designed to handle real-time streaming data, enabling near-instantaneous transfer and processing of data.
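As a rough illustration of the streaming style, the sketch below chains Python generators so that each event is processed as soon as it arrives and an alert is raised when a running average crosses a threshold. The event format (`"sensor,value"` strings), the threshold, and the function names are assumptions made for this example. A real deployment would typically read from a message queue or streaming platform rather than a list.

```python
def parse(events):
    """Turn raw 'sensor,value' strings into (sensor, float) pairs, one at a time."""
    for raw in events:
        sensor, value = raw.split(",")
        yield sensor, float(value)


def running_average(readings):
    """Emit the updated running average for a sensor after each new reading."""
    totals = {}
    for sensor, value in readings:
        total, count = totals.get(sensor, (0.0, 0))
        total, count = total + value, count + 1
        totals[sensor] = (total, count)
        yield sensor, total / count


def alerts(averages, threshold):
    """Yield an alert message whenever a running average exceeds the threshold."""
    for sensor, average in averages:
        if average > threshold:
            yield f"ALERT {sensor}: running average {average:.1f} exceeds {threshold}"


def stream_pipeline(events, threshold):
    """Chain the stages lazily; nothing is processed until the result is consumed."""
    return alerts(running_average(parse(events)), threshold)


if __name__ == "__main__":
    incoming = ["a,10", "a,30", "b,5", "a,50"]  # hypothetical event stream
    for message in stream_pipeline(incoming, 15):
        print(message)
```

Because every stage is a generator, no stage waits for the full dataset: each event flows through parsing, aggregation, and alerting before the next one is read, which is the main contrast with the scheduled batch approach.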

In short, ETL excels at structured data transformation in batch processing, while data pipelines can handle both structured and unstructured data, including in real time. The selection between ETL and data pipelines hinges on an organization’s specific needs, data processing workflows, and the characteristics of the data sources and targets involved.

What is ETL in Data Warehouse:

The primary goal of ETL is to prepare data for storage in a data warehouse, data lake, or other target system. Common destination systems include data warehouses, data lakes, and databases. Data warehouses gained popularity in the 1980s, enabling analytics and business intelligence (BI). Cloud-based data warehouses (e.g., AWS, Azure, Snowflake) later revolutionized ETL by providing global access and scalability.
