What is Kafka Used For: Unleash the Power of Data Streaming

What is Kafka used for? Kafka combines messaging, storage, and stream processing to handle both historical and real-time data, making it a versatile tool for various use cases.

Kafka is primarily used to build real-time streaming data pipelines and applications that adapt to the data streams.

What is Kafka used for?

Apache Kafka is an open-source distributed event streaming platform that serves various purposes.

Apache Kafka is built around six key components: Producers, Consumers, Topics, Partitions, Brokers, and ZooKeeper. Each of these elements plays a vital role in Kafka’s ability to handle high-throughput, fault-tolerant data ingestion, processing, and storage across distributed systems. Understanding how these components work together is the foundation for using Kafka effectively to power real-time applications, microservices, and analytics pipelines.


- Producer: A producer is a client application that writes (publishes) records to Kafka topics, often at high volume.

- Consumer: A consumer is a client application that subscribes to topics and reads the records that producers have written.

- Topic: A topic is a named category, or feed, to which records are published. All records coming from producers are organized into topics, and consumer applications read from topics.

- Brokers: These are the Kafka servers that handle the data. Brokers receive records from producers, persist them to disk, and serve them to consumers.

- Partition: This is the unit of storage and parallelism within a topic. A partition is an ordered, append-only log, and each record in it is identified by a sequential ID called its offset. Records are immutable once written: they cannot be modified, and they are removed only when the topic’s retention or compaction policy discards them. A topic can have multiple partitions, spread across brokers, to handle a larger amount of data.

- ZooKeeper: This is a centralized service that has traditionally been used to coordinate the brokers in a Kafka cluster. It maintains the list of brokers in the cluster and facilitates leader elections for partitions. Newer Kafka releases can instead run in the built-in KRaft consensus mode, and Kafka 4.0 removed ZooKeeper support entirely.
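To make the relationship between topics, partitions, offsets, producers, and consumers concrete, here is a minimal in-memory sketch. It is not the Kafka client API: the `Topic` class and its `produce`/`consume` methods are hypothetical, and `zlib.crc32` stands in for the murmur2 hash that Kafka’s default partitioner applies to record keys.

```python
import zlib
from dataclasses import dataclass


@dataclass
class Record:
    key: str
    value: str
    offset: int


class Topic:
    """Hypothetical in-memory topic: a fixed number of append-only partition logs."""

    def __init__(self, name: str, num_partitions: int):
        self.name = name
        self.partitions = [[] for _ in range(num_partitions)]

    def partition_for(self, key: str) -> int:
        # Stand-in for Kafka's key hashing: the same key always lands in the same partition.
        return zlib.crc32(key.encode("utf-8")) % len(self.partitions)

    def produce(self, key: str, value: str) -> tuple:
        """Producer side: append a record and return (partition, offset)."""
        partition = self.partition_for(key)
        log = self.partitions[partition]
        offset = len(log)  # offsets count up from 0 within each partition
        log.append(Record(key, value, offset))
        return partition, offset

    def consume(self, partition: int, from_offset: int) -> list:
        """Consumer side: read records at or after from_offset; nothing is removed."""
        return self.partitions[partition][from_offset:]


orders = Topic("orders", num_partitions=3)
p1, o1 = orders.produce("customer-42", "order #1")
p2, o2 = orders.produce("customer-42", "order #2")
print(p1 == p2, o1, o2)  # True 0 1
print([r.value for r in orders.consume(p1, 0)])  # ['order #1', 'order #2']
```

Because records with the same key share a partition, their relative order is preserved; Kafka does not guarantee ordering across different partitions.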

Apache Kafka is used by a wide range of companies and organizations across various industries that need to build real-time data pipelines or streaming applications. Developers who work on distributed systems or data streaming applications, and who have solid programming skills, will benefit from becoming familiar with Apache Kafka.

Kafka is written in Java and Scala. The Apache Kafka project ships a Java client, and client libraries for other languages such as C/C++, Python, Go, Node.js, and Ruby are available from the wider ecosystem. The primary roles that work with Apache Kafka within organizations are software engineers, data engineers, machine learning engineers, and data scientists.

Here are six areas where Kafka is commonly used:

- Real-Time Data Processing: Kafka is commonly used to process real-time streams of data, such as customer interactions (e.g., purchases, browsing behavior, and product reviews) on e-commerce platforms.

- Financial Services: In financial services, Kafka helps handle real-time data from stock market feeds, transactions, and risk analysis.

- Internet of Things (IoT): Kafka can ingest and process data from IoT devices, enabling real-time monitoring, analytics, and control.

- Telecommunications: Kafka is used for call detail record (CDR) processing, network monitoring, and fraud detection in telecom networks.

- Social Media: Social media platforms leverage Kafka to manage real-time data streams, such as user posts, likes, and comments.

- Event-Driven Architectures: Kafka is a fundamental component for building event-driven architectures, where events trigger actions or updates across different services or systems.
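The core of an event-driven architecture is that a publisher emits an event without knowing which services will react to it. The sketch below models only that fan-out idea with a hypothetical in-process `EventBus`; unlike Kafka, it calls handlers synchronously and does not store events, so treat it as an illustration of the pattern rather than a Kafka client.

```python
from collections import defaultdict
from typing import Callable


class EventBus:
    """Hypothetical in-process pub/sub hub: every subscriber to a topic sees every event."""

    def __init__(self):
        self.subscribers = defaultdict(list)  # topic name -> list of handler functions

    def subscribe(self, topic: str, handler: Callable[[dict], None]) -> None:
        self.subscribers[topic].append(handler)

    def publish(self, topic: str, event: dict) -> int:
        """Deliver the event to every subscriber and return how many received it."""
        # The publisher never references the consumers directly, which keeps services decoupled.
        for handler in self.subscribers[topic]:
            handler(event)
        return len(self.subscribers[topic])


bus = EventBus()
actions = []
bus.subscribe("user-signups", lambda e: actions.append(f"send welcome email to {e['email']}"))
bus.subscribe("user-signups", lambda e: actions.append(f"create profile for user {e['user_id']}"))
bus.publish("user-signups", {"user_id": 7, "email": "a@example.com"})
print(actions)
```

Adding a new reaction to a sign-up means adding a subscriber; the code that publishes the event does not change.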

Why is Kafka used?

Kafka is used by over 100,000 organizations across the world and is backed by a thriving community of professional developers, who are constantly advancing the state of the art in stream processing together. Due to Kafka’s high throughput, fault tolerance, resilience, and scalability, there are numerous use cases across almost every industry, from banking and fraud detection to transportation and IoT.

What is Apache Kafka used for?

Apache Kafka is a versatile distributed streaming platform that is used for a wide range of applications and use cases, including:

Real-Time Data Ingestion and Processing:

- Handling high-volume, high-velocity data streams from sources like IoT devices, web/mobile applications, and sensors

- Enabling the creation of real-time data pipelines and event-driven architectures

Building Scalable, Fault-Tolerant Log-Based Systems:

- Storing and managing large volumes of data as fault-tolerant, distributed logs

- Supporting use cases like database change data capture (CDC) and event sourcing
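Event sourcing treats the log as the source of truth: current state is derived by replaying events in order, and earlier states can be rebuilt by stopping the replay at an earlier offset. A minimal sketch, assuming a hypothetical list of account events read from a single partition so that their order is fixed:

```python
from functools import reduce

# Hypothetical events for one bank account, in the order they were written to the log.
events = [
    {"type": "AccountOpened", "balance": 0},
    {"type": "Deposited", "amount": 100},
    {"type": "Withdrawn", "amount": 30},
    {"type": "Deposited", "amount": 50},
]


def apply_event(state: dict, event: dict) -> dict:
    """Return the state after one event; events themselves are never modified."""
    kind = event["type"]
    if kind == "AccountOpened":
        return {"balance": event["balance"]}
    if kind == "Deposited":
        return {"balance": state["balance"] + event["amount"]}
    if kind == "Withdrawn":
        return {"balance": state["balance"] - event["amount"]}
    raise ValueError(f"unknown event type: {kind}")


def replay(log: list, upto=None) -> dict:
    """Rebuild state by folding over the log, optionally stopping before offset `upto`."""
    return reduce(apply_event, log[:upto], {})


print(replay(events))          # {'balance': 120}
print(replay(events, upto=3))  # {'balance': 70}, the state after offsets 0 to 2
```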

Powering Microservices and Distributed Applications:

- Facilitating communication and integration between microservices

- Decoupling services and enabling asynchronous, event-driven interactions

Data Warehousing and Analytics:

- Streaming data into data lakes and warehouses for downstream processing

- Enabling near-real-time data replication and ETL pipelines to support business intelligence and predictive analytics

Robust Message Queueing and Pub/Sub Messaging:

- Providing queue-like delivery, in which each message is processed by only one consumer within a consumer group

- Supporting reliable, scalable pub/sub messaging patterns for use cases like asynchronous task queues and event notification systems
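Kafka gets both behaviors from consumer groups: within a group, each partition is read by only one member (queue-like), while separate groups each read the full stream (pub/sub). The function below is a simplified round-robin assignment with hypothetical group and member names; real Kafka clients offer several pluggable assignment strategies and rebalance automatically when members join or leave.

```python
def assign_partitions(partitions: list, members: list) -> dict:
    """Round-robin assignment: each partition goes to exactly one member of the group."""
    assignment = {m: [] for m in members}
    for i, p in enumerate(sorted(partitions)):
        assignment[members[i % len(members)]].append(p)
    return assignment


partitions = [0, 1, 2, 3, 4, 5]
# Queue-like: two workers in one group split the partitions, so each record is handled once per group.
billing = assign_partitions(partitions, ["billing-1", "billing-2"])
# Pub/sub: an independent group receives its own full copy of the stream.
audit = assign_partitions(partitions, ["audit-1"])
print(billing)  # {'billing-1': [0, 2, 4], 'billing-2': [1, 3, 5]}
print(audit)    # {'audit-1': [0, 1, 2, 3, 4, 5]}
```

If a group has more members than the topic has partitions, the extra members sit idle, which is why the partition count caps a group’s parallelism.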

What does Apache Kafka do?

Apache Kafka is a distributed streaming platform that enables high-throughput, fault-tolerant data ingestion, processing, and storage. It powers real-time applications, microservices, and analytics pipelines by providing a scalable, reliable message queue and pub/sub messaging system across organizations and industries.

Use case for Kafka

Let’s look at how Kafka is used in e-commerce order processing.

In an e-commerce platform, Kafka can be used to power the order processing pipeline:

- When a customer places an order on the website, the order details are published as an event to a Kafka topic.

- A Kafka consumer service picks up the order event and begins processing it, performing tasks like:
  - Validating the order
  - Checking inventory and reserving products
  - Triggering the payment transaction
  - Notifying the customer

- Other downstream services subscribe to the order topic to perform additional actions, such as:
  - Updating the customer’s order history
  - Sending order confirmation emails
  - Triggering shipment logistics

- Kafka’s distributed, fault-tolerant architecture keeps order events available for processing, even under high traffic or when individual components fail.

- The event log in Kafka also enables easy replay and analysis of order events for business intelligence, fraud detection, and other purposes.
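Replay works because consuming does not delete records; each consumer group only remembers how far it has read. A minimal single-partition sketch, assuming a hypothetical `OrderLog` class (not Kafka’s API) in which a new group starts from the beginning of the log, which corresponds to Kafka’s `auto.offset.reset=earliest` setting:

```python
class OrderLog:
    """Hypothetical single-partition 'orders' topic with per-group committed offsets."""

    def __init__(self):
        self.records = []    # append-only log of order events
        self.committed = {}  # consumer group id -> next offset to read

    def publish(self, order: dict) -> int:
        """Append an order event and return its offset."""
        self.records.append(order)
        return len(self.records) - 1

    def poll(self, group: str) -> list:
        """Return records this group has not seen yet and commit the new position.

        This commits immediately; a real consumer usually commits after processing."""
        start = self.committed.get(group, 0)  # new groups start at offset 0 ("earliest")
        batch = self.records[start:]
        self.committed[group] = len(self.records)
        return batch

    def rewind(self, group: str, offset: int = 0) -> None:
        """Move a group's position back so it re-reads history, e.g. for fraud analysis."""
        self.committed[group] = offset


log = OrderLog()
log.publish({"order_id": 1, "sku": "book", "qty": 2})
log.publish({"order_id": 2, "sku": "pen", "qty": 10})

# Each downstream service uses its own consumer group, so each one sees every order.
for order in log.poll("inventory"):
    print("reserve", order["qty"], "x", order["sku"])
for order in log.poll("email"):
    print("confirm order", order["order_id"])

log.publish({"order_id": 3, "sku": "lamp", "qty": 1})
print(len(log.poll("inventory")))  # 1: only the new order
log.rewind("email")
print(len(log.poll("email")))      # 3: the full history is replayed
```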

What is Kafka used for? Some examples

Here are some examples of Kafka in use:

- Website Activity Tracking: Kafka can be used to track user activity on a website by setting up real-time publish-subscribe feeds. For example, when a user clicks a button, the web application can publish a message to a topic.

- Fraud Detection: Banks can use Kafka to monitor for fraud by sending every credit card transaction to Kafka. An application consuming these transactions can use their frequency and location to decide whether to suspend a card or alert the user and the fraud department.

- Internet of Things: Kafka can act as a central hub for collecting and distributing data from multiple IoT devices. Because producers and consumers are decoupled, devices can be added or removed without changing the systems that consume their data, and the cluster itself can be scaled by adding brokers and partitions.
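The fraud-detection example relies on windowed logic that runs in a consumer application, not inside Kafka itself. A minimal sliding-window sketch, assuming hypothetical thresholds (more than three transactions per card within 60 seconds, or a change of country inside that window) chosen only for illustration, and assuming each card’s transactions arrive in timestamp order:

```python
from collections import defaultdict, deque


class FraudDetector:
    """Sliding-window checks per card over a stream of (card, timestamp, country) events."""

    def __init__(self, max_txns: int, window_s: float):
        self.max_txns = max_txns
        self.window_s = window_s
        self.recent = defaultdict(deque)  # card id -> deque of (timestamp, country)

    def check(self, card: str, ts: float, country: str) -> list:
        """Record one transaction and return the reasons (if any) to flag it."""
        window = self.recent[card]
        # Drop transactions older than the window, measured back from this one.
        while window and ts - window[0][0] > self.window_s:
            window.popleft()
        reasons = []
        if window and window[-1][1] != country:
            reasons.append("country changed within window")
        window.append((ts, country))
        if len(window) > self.max_txns:
            reasons.append("too many transactions")
        return reasons


detector = FraudDetector(max_txns=3, window_s=60)
transactions = [
    ("card-1", 0, "US"),
    ("card-1", 10, "US"),
    ("card-1", 20, "FR"),
    ("card-1", 30, "FR"),
    ("card-2", 40, "DE"),
]
for card, ts, country in transactions:
    flags = detector.check(card, ts, country)
    if flags:
        print(card, ts, flags)
# card-1 20 ['country changed within window']
# card-1 30 ['too many transactions']
```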

What are the benefits of Kafka?


- High Performance: Kafka lets platforms process messages at very high rates, often well over 100,000 messages per second, with low latency. It maintains stable performance even under heavy data loads, handling terabytes of messages stored in partitioned, ordered logs.

- Scalability: Kafka is a distributed system that can handle large volumes of data and scale quickly without downtime. It achieves scalability by allowing partitions to be distributed across different servers.

- Fault Tolerance: Kafka runs as a cluster of multiple brokers, and each partition can be replicated across several of them. If a broker fails, a replica on another broker takes over as the partition leader, so the cluster keeps serving data.

- Durability: Kafka persists messages to disk and can replicate each partition across several brokers, so stored data does not depend on any single machine surviving.

- Easy Accessibility: Data stored in Kafka is easily accessible to any authorized consumer application, as all the data from producers is centralized in the system.

- Eliminates Multiple Integrations: Kafka eliminates the need for multiple data source integrations, as all of a producer’s data goes through Kafka. This reduces complexity, time, and cost compared to managing disparate integrations.
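Scalability and fault tolerance both come from spreading partition replicas across brokers. The sketch below uses a simplified round-robin placement and promotes the first surviving replica to leader; the function names are hypothetical, and real Kafka uses rack-aware placement and, by default, only elects leaders from in-sync replicas.

```python
def place_replicas(num_partitions: int, brokers: list, replication_factor: int) -> dict:
    """Give each partition replication_factor distinct brokers; the first is the leader."""
    if replication_factor > len(brokers):
        raise ValueError("replication factor cannot exceed the number of brokers")
    return {
        p: [brokers[(p + r) % len(brokers)] for r in range(replication_factor)]
        for p in range(num_partitions)
    }


def leaders_after_failure(placement: dict, failed: set) -> dict:
    """Promote the first live replica of each partition; None means the partition is offline."""
    leaders = {}
    for partition, replicas in placement.items():
        live = [b for b in replicas if b not in failed]
        leaders[partition] = live[0] if live else None
    return leaders


placement = place_replicas(4, ["b1", "b2", "b3"], replication_factor=2)
print(placement)  # {0: ['b1', 'b2'], 1: ['b2', 'b3'], 2: ['b3', 'b1'], 3: ['b1', 'b2']}
print(leaders_after_failure(placement, {"b1"}))        # every partition still has a leader
print(leaders_after_failure(placement, {"b1", "b2"}))  # partitions 0 and 3 go offline
```

In this simplified model, a replication factor of N lets every partition survive up to N - 1 broker failures.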

What is Kafka used for in microservices?

Apache Kafka is an excellent fit for microservices architectures for several key reasons. Kafka can act as a central event hub where microservices publish and subscribe to events. Its publish-subscribe model lets services communicate asynchronously instead of through synchronous calls, which keeps them loosely coupled and lets each one scale on its own. Kafka’s distributed, scalable architecture also allows the event hub to scale independently of the microservices, so the overall system can handle increasing loads without disruption.

Additionally, Kafka’s capabilities for real-time data streaming and processing make it useful for powering various real-time applications, such as fraud detection, real-time analytics, and monitoring systems. By leveraging Kafka as the central nervous system for a microservices architecture, organizations can build highly scalable, fault-tolerant, and loosely coupled distributed systems that are capable of handling high-throughput, real-time data flows.

- Scalability: Services can scale easily for high-volume event processing

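- Fault Tolerance: Replicated partitions keep events available when individual brokers fail, and spreading load across brokers helps avoid the bottlenecks that monolithic architectures built around a single relational database can run into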

- High Availability: Kafka can help minimize outages

Kafka plays a key role as a central data backbone in microservices architectures, enabling real-time data synchronization between the different services. By allowing services to publish events representing state changes, and other services to consume and react to those events, Kafka facilitates asynchronous, event-driven communication between the microservices.
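When services publish state changes keyed by entity ID, a consuming service can keep a local, up-to-date view by retaining only the latest value per key; this mirrors what Kafka’s log compaction preserves for a topic. A minimal sketch, assuming hypothetical customer-address events in which a `None` value acts as a tombstone (deletion marker):

```python
def materialize(change_events: list) -> dict:
    """Build a key -> latest-value view; a None value (tombstone) deletes the key."""
    view = {}
    for key, value in change_events:  # events in log order
        if value is None:
            view.pop(key, None)
        else:
            view[key] = value  # a newer value replaces the older one
    return view


address_changes = [
    ("cust-1", {"city": "Oslo"}),
    ("cust-2", {"city": "Lima"}),
    ("cust-1", {"city": "Bergen"}),
    ("cust-2", None),
]
print(materialize(address_changes))  # {'cust-1': {'city': 'Bergen'}}
```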

What is Kafka used for in Java?

Java applications and microservices use Kafka’s Java clients, or the Kafka Streams library, to read their input data from and write their output data to Kafka clusters. Because Kafka combines messaging, storage, and stream processing, these Java-based applications can store and analyze both historical and real-time data.

Key use cases include:

- Real-time Data Pipelines: Kafka can be used to build real-time streaming data pipelines and applications that can adapt to the data streams.

- Big Data Collection: Kafka can be used to collect large amounts of data and perform real-time analysis.

- Event Streaming: Kafka can feed events to complex event processing (CEP) systems, IFTTT-style automation, and IoT systems.

- Microservices: Kafka can be used in conjunction with in-memory microservices for added durability, and also to decouple system dependencies in microservice architectures.

- Messaging: Kafka can replace traditional message brokers and has better throughput, built-in partitioning, replication, and fault tolerance.

- Website Activity Tracking: Kafka’s original use case was rebuilding a user activity tracking pipeline as a set of real-time publish-subscribe feeds.

- Metrics: Kafka can aggregate statistics from distributed applications to produce centralized feeds of operational data.
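Metrics aggregation is usually done over fixed time windows. A minimal tumbling-window sketch, assuming hypothetical `(timestamp_s, service, latency_ms)` samples read from a metrics topic; in practice this kind of aggregation is often built with Kafka Streams or another stream processor:

```python
from collections import defaultdict


def aggregate(samples: list, window_s: int) -> dict:
    """Group (timestamp_s, service, latency_ms) samples into fixed, non-overlapping windows
    and return {(window_start, service): {"count": n, "mean_ms": mean}}."""
    buckets = defaultdict(list)
    for ts, service, latency_ms in samples:
        window_start = ts - ts % window_s  # with 60 s windows, ts=65 falls in [60, 120)
        buckets[(window_start, service)].append(latency_ms)
    return {
        key: {"count": len(values), "mean_ms": sum(values) / len(values)}
        for key, values in sorted(buckets.items())
    }


samples = [
    (0, "checkout", 120), (15, "checkout", 80), (30, "search", 40),
    (65, "checkout", 200), (70, "search", 60),
]
for key, stats in aggregate(samples, window_s=60).items():
    print(key, stats)
```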

What is Kafka used for in DevOps?

Here are common use cases for Kafka in DevOps:

- Microservices Communication: Kafka enables asynchronous, event-driven communication between microservices, which is a key architectural pattern in modern DevOps-driven application development.

- Monitoring and Observability: Kafka can be used to collect and stream log data, metrics, and other telemetry from across the DevOps infrastructure. This data can then be used for monitoring, alerting, and building observability dashboards.

- Continuous Integration/Continuous Deployment (CI/CD): Kafka can be integrated into CI/CD pipelines to trigger builds, tests, and deployments based on events published to Kafka topics.

- Event-Driven Automation: Kafka enables event-driven automation, where infrastructure and application changes can trigger appropriate actions and responses throughout the DevOps toolchain.

- Data Pipelines: Kafka can be used to build real-time data pipelines to move data between different systems, databases, and analytics tools in the DevOps ecosystem.

- Scalability and Fault Tolerance: Kafka’s distributed, fault-tolerant architecture makes it well-suited for powering mission-critical DevOps workflows that need to be highly scalable and resilient.

- DevOps Toolchain Integration: Kafka can integrate with a wide range of DevOps tools like Prometheus, Elasticsearch, Splunk, and others to enable end-to-end data flows and observability.

In short, Apache Kafka is used in modern distributed systems and DevOps environments to enable scalable, fault-tolerant, real-time data streaming, messaging, and event-driven communication between microservices, applications, and infrastructure components.

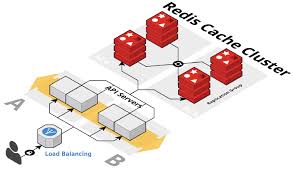

What is Redis used for?


Redis is an open-source, in-memory data structure store that is used for a variety of purposes including:

- Caching: Redis can be used to cache web pages, which can reduce server load and improve page loading times. It’s also well-suited for storing chat messages.

- Session Management: Redis can be used to store session data.

- Real-time Analytics: Because Redis keeps its key-value data in memory, reads and writes are fast enough to support real-time analytics.

- Message Broker: Redis can be used to facilitate communication between different parts of an application.

- Live Streaming: Redis can store metadata about users’ profiles and viewing histories, as well as authentication information for millions of users. It can also store manifest files, which allow Content Delivery Networks (CDNs) to stream films to many users simultaneously.
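The caching use case usually follows the cache-aside pattern: check the cache, and on a miss compute the value and store it with an expiry. The sketch below uses a hypothetical dict-based `TTLCache` as a stand-in for Redis so it runs without a server; with a real Redis client, the expiry would typically be set through the `EX` option of `SET` or with `EXPIRE`.

```python
import time


class TTLCache:
    """Hypothetical dict-based stand-in for Redis: values expire ttl_s seconds after being set."""

    def __init__(self, ttl_s: float, clock=time.monotonic):
        self.ttl_s = ttl_s
        self.clock = clock
        self.store = {}  # key -> (expires_at, value)

    def get(self, key):
        entry = self.store.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self.clock() >= expires_at:
            del self.store[key]  # lazily evict expired entries on read
            return None
        return value

    def set(self, key, value):
        self.store[key] = (self.clock() + self.ttl_s, value)


def get_page(cache: TTLCache, url: str, render) -> str:
    """Cache-aside: serve from the cache when possible, otherwise render and store the page."""
    page = cache.get(url)
    if page is None:
        page = render(url)
        cache.set(url, page)
    return page


now = [0.0]  # fake clock so expiry can be demonstrated without sleeping
cache = TTLCache(ttl_s=30, clock=lambda: now[0])
renders = []


def render(url):
    renders.append(url)
    return f"<html>{url}</html>"


get_page(cache, "/home", render)  # miss: rendered and cached
get_page(cache, "/home", render)  # hit: served from the cache
now[0] = 31
get_page(cache, "/home", render)  # expired: rendered again
print(len(renders))  # 2
```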

When to use Redis?

- Redis is often used for caching web pages, reducing load on servers, and improving page loading times.

- It can be used as a message broker to facilitate communication between different parts of an application.

- Additionally, Redis supports transactions, making it possible to execute multiple operations atomically.

Why use Redis?

Redis describes itself as the world’s fastest in-memory database. It offers cloud and on-premises products for caching, vector search, and NoSQL workloads that are designed to fit into existing tech stacks, making it easier to build, scale, and deploy fast applications.
